Computer programming

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks.[1][2] It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process.

History

Programmable devices have existed for centuries. As early as the 9th century, a programmable music sequencer was invented by the Persian Banu Musa brothers, who described an automated mechanical flute player in the Book of Ingenious Devices.[3][4] In 1206, the Arab engineer Al-Jazari invented a programmable drum machine where a musical mechanical automaton could be made to play different rhythms and drum patterns, via pegs and cams.[5][6] In 1801, the Jacquard loom could produce entirely different weaves by changing the "program" – a series of pasteboard cards with holes punched in them.

Code-breaking algorithms have also existed for centuries. In the 9th century, the Arab mathematician Al-Kindi described a cryptographic algorithm for deciphering encrypted code, in A Manuscript on Deciphering Cryptographic Messages. He gave the first description of cryptanalysis by frequency analysis, the earliest code-breaking algorithm.[7]

The first computer program is generally dated to 1843, when mathematician Ada Lovelace published an algorithm to calculate a sequence of Bernoulli numbers, intended to be carried out by Charles Babbage's Analytical Engine.[8] However, Charles Babbage himself had written a program for the Analytical Engine in 1837.[9][10]

In the 1880s, Herman Hollerith invented the concept of storing data in machine-readable form.[11] Later, a control panel (plug board) added to his 1906 Type I Tabulator allowed it to be programmed for different jobs, and by the late 1940s, unit record equipment such as the IBM 602 and IBM 604 was programmed by control panels in a similar way, as were the first electronic computers. However, with the concept of the stored-program computer introduced in 1949, both programs and data were stored and manipulated in the same way in computer memory.[12]

Machine language

Machine code was the language of early programs, written in the instruction set of the particular machine, often in binary notation. Assembly languages were soon developed that let the programmer specify instructions in a text format (e.g., ADD X, TOTAL), with abbreviations for each operation code and meaningful names for specifying addresses. However, because an assembly language is little more than a different notation for a machine language, two machines with different instruction sets also have different assembly languages.
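
The idea that an assembly instruction is only a notation for a machine word can be illustrated with a minimal sketch of a toy assembler. The opcode table, the symbol addresses, and the 16-bit word layout below are all invented for illustration and do not correspond to any real machine.

```python
# Toy assembler: every opcode, address, and the word layout is hypothetical.
OPCODES = {"LOAD": 0x1, "ADD": 0x2, "STORE": 0x3}   # mnemonic -> operation code
SYMBOLS = {"X": 0x10, "TOTAL": 0x11}                # name -> memory address


def assemble(line):
    """Translate one 'MNEMONIC A, B' line into a single 16-bit word.

    Assumed layout: a 4-bit opcode followed by two 6-bit operand addresses.
    """
    mnemonic, _, operand_text = line.strip().partition(" ")
    operands = [SYMBOLS[name.strip()] for name in operand_text.split(",")]
    if len(operands) != 2:
        raise ValueError(f"expected two operands: {line!r}")
    first, second = operands
    return (OPCODES[mnemonic] << 12) | (first << 6) | second


word = assemble("ADD X, TOTAL")
print(f"{word:016b}")  # prints 0010010000010001
```

A machine with a different instruction set would need a different opcode table and word layout, which is why its assembly language would differ as well.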

Compiler languages

High-level languages made the process of developing a program simpler and more understandable, and less bound to the underlying hardware. The first compiler-related tool, the A-0 System, was developed in 1952[13] by Grace Hopper, who also coined the term 'compiler'.[14][15] FORTRAN, the first widely used high-level language to have a functional implementation, came out in 1957,[16] and many other languages were soon developed, in particular COBOL, aimed at commercial data processing, and Lisp, for computer research.

These compiled languages allow the programmer to write programs in terms that are syntactically richer, and more capable of abstracting the code, making it easy to target varying machine instruction sets via compilation declarations and heuristics. Compilers harnessed the power of computers to make programming easier[16] by allowing programmers to specify calculations by entering a formula using infix notation.
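
As a rough illustration of what translating an infix formula involves, the following sketch converts a formula to postfix order with the shunting-yard algorithm and then to instructions for a stack machine. The instruction names (PUSH, ADD, and so on) are hypothetical, only the four basic operators and parentheses are handled, and no error checking is done; real compilers are far more elaborate.

```python
import re

PRECEDENCE = {"+": 1, "-": 1, "*": 2, "/": 2}


def to_postfix(formula):
    """Convert an infix formula to postfix order (shunting-yard algorithm)."""
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|[-+*/()]", formula)
    output, operators = [], []
    for token in tokens:
        if token in PRECEDENCE:
            # Pop operators that bind at least as tightly (left-associative).
            while (operators and operators[-1] != "("
                   and PRECEDENCE[operators[-1]] >= PRECEDENCE[token]):
                output.append(operators.pop())
            operators.append(token)
        elif token == "(":
            operators.append(token)
        elif token == ")":
            while operators[-1] != "(":
                output.append(operators.pop())
            operators.pop()  # discard the matching "("
        else:
            output.append(token)  # variable name or number
    while operators:
        output.append(operators.pop())
    return output


def to_stack_code(formula):
    """Map postfix tokens to instructions of a hypothetical stack machine."""
    names = {"+": "ADD", "-": "SUB", "*": "MUL", "/": "DIV"}
    return [names.get(token, f"PUSH {token}") for token in to_postfix(formula)]


print(to_postfix("a + b * (c - 2)"))  # ['a', 'b', 'c', '2', '-', '*', '+']
print(to_stack_code("a + b"))         # ['PUSH a', 'PUSH b', 'ADD']
```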

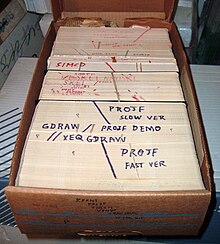

Source code entry

Programs were mostly entered using punched cards or paper tape. By the late 1960s, data storage devices and computer terminals became inexpensive enough that programs could be created by typing directly into the computers. Text editors were also developed that allowed changes and corrections to be made much more easily than with punched cards.

Modern programming

Quality requirements

Whatever the approach to development may be, the final program must satisfy some fundamental properties. The following properties are among the most important:[17][18]

- Reliability: how often the results of a program are correct. This depends on conceptual correctness of algorithms and minimization of programming mistakes, such as mistakes in resource management (e.g., buffer overflows and race conditions) and logic errors (such as division by zero or off-by-one errors).

- Robustness: how well a program anticipates problems due to errors (not bugs). This includes situations such as incorrect, inappropriate or corrupt data, unavailability of needed resources such as memory, operating system services, and network connections, user error, and unexpected power outages.

- Usability: the ergonomics of a program, that is, the ease with which a person can use the program for its intended purpose or, in some cases, even for unanticipated purposes. Usability can make or break a program's success regardless of its other qualities. It involves a wide range of textual, graphical, and sometimes hardware elements that improve the clarity, intuitiveness, cohesiveness, and completeness of a program's user interface.

- Portability: the range of computer hardware and operating system platforms on which the source code of a program can be compiled/interpreted and run. This depends on differences in the programming facilities provided by the different platforms, including hardware and operating system resources, expected behavior of the hardware and operating system, and availability of platform-specific compilers (and sometimes libraries) for the language of the source code.

- Maintainability: the ease with which a program can be modified by its present or future developers in order to make improvements or to customize, fix bugs and security holes, or adapt it to new environments. Good practices[19] during initial development make the difference in this regard. This quality may not be directly apparent to the end user but it can significantly affect the fate of a program over the long term.

- Efficiency/performance: a measure of the system resources a program consumes (processor time, memory space, slow devices such as disks, network bandwidth, and to some extent even user interaction): the less, the better. This also includes careful management of resources, for example cleaning up temporary files and eliminating memory leaks. Efficiency is often discussed in terms of the chosen programming language. Although the language certainly affects performance, even slower languages, such as Python, can execute many programs quickly enough to seem instant from a human perspective. Speed, resource usage, and performance are important for programs that bottleneck the system, but efficient use of programmer time is also important and is related to cost: adding more hardware may be cheaper than optimizing the software.

Using automated tests and fitness functions can help to maintain some of the aforementioned attributes.[20]
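
A minimal sketch of how reliability, robustness, and automated tests relate is shown below. The functions `parse_scores` and `average` and the sample data are hypothetical, chosen only for illustration.

```python
def parse_scores(lines):
    """Parse one integer score per line, setting aside lines that are not valid."""
    scores, rejected = [], []
    for line in lines:
        try:
            scores.append(int(line.strip()))
        except ValueError:
            # Robustness: corrupt input is recorded instead of crashing the program.
            rejected.append(line)
    return scores, rejected


def average(scores):
    """Return the mean of the scores, or None when there are none."""
    if not scores:
        # Reliability: avoid a division by zero on empty input.
        return None
    return sum(scores) / len(scores)


def test_scores():
    """Automated test that guards both properties against future changes."""
    scores, rejected = parse_scores(["10", " 20 ", "abc", ""])
    assert scores == [10, 20]
    assert rejected == ["abc", ""]
    assert average(scores) == 15.0
    assert average([]) is None


test_scores()
```

Running such a test after every change gives early warning when a modification breaks one of these properties.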

Readability of source code

In computer programming, readability refers to the ease with which a human reader can comprehend the purpose, control flow, and operation of source code. It affects the aspects of quality above, including portability, usability and most importantly maintainability.

Readability is important because programmers spend the majority of their time reading, trying to understand, reusing, and modifying existing source code, rather than writing new source code. Unreadable code often leads to bugs, inefficiencies, and duplicated code. A study found that a few simple readability transformations made code shorter and drastically reduced the time to understand it.[21]

Following a consistent programming style often helps readability. However, readability is more than just programming style. Many factors, having little or nothing to do with the ability of the computer to efficiently compile and execute the code, contribute to readability.[22] Some of these factors include:

- Different indent styles (whitespace)

- Comments

- Decomposition

- Naming conventions for objects (such as variables, classes, functions, procedures, etc.)

The presentation aspects of this (such as indents, line breaks, color highlighting, and so on) are often handled by the source code editor, but the content aspects reflect the programmer's talent and skills.

Various visual programming languages have also been developed with the intent to resolve readability concerns by adopting non-traditional approaches to code structure and display. Integrated development environments (IDEs) aim to integrate all such help. Techniques like code refactoring can enhance readability.
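
The effect of naming, layout, and comments can be seen in a small hypothetical example. Both functions below behave identically; the second is a refactoring of the first that states its intent.

```python
# Hard to read: cryptic names, a statement squeezed onto the "if" line, no documentation.
def f(a, b):
    r = []
    for x in a:
        if x % b == 0: r.append(x)
    return r


# Easier to read: descriptive names, a docstring, and a single clear expression.
def select_multiples(numbers, divisor):
    """Return the numbers that are exact multiples of divisor."""
    return [number for number in numbers if number % divisor == 0]


print(f([1, 2, 3, 4, 6], 2))                 # [2, 4, 6]
print(select_multiples([1, 2, 3, 4, 6], 2))  # [2, 4, 6]
```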

Algorithmic complexity

The academic field and the engineering practice of computer programming are concerned with discovering and implementing the most efficient algorithms for a given class of problems. For this purpose, algorithms are classified into orders using Big O notation, which expresses resource use—such as execution time or memory consumption—in terms of the size of an input. Expert programmers are familiar with a variety of well-established algorithms and their respective complexities and use this knowledge to choose algorithms that are best suited to the circumstances.
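
As an illustration of how the order of an algorithm affects resource use, the sketch below counts the elements examined by a linear search, which is O(n), and by a binary search on sorted data, which is O(log n). The step-counting functions are written for this example only.

```python
def linear_search_steps(items, target):
    """Return how many elements are examined before target is found: O(n)."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(sorted_items, target):
    """Return how many midpoints are examined in a sorted list: O(log n)."""
    low, high, steps = 0, len(sorted_items) - 1, 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return steps


data = list(range(1_000_000))
print(linear_search_steps(data, 999_999))  # 1000000: every element is examined
print(binary_search_steps(data, 999_999))  # at most 20, roughly log2 of the input size
```

For a million elements the difference is a factor of tens of thousands, which is why the choice of algorithm often matters more than low-level tuning.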

Methodologies

The first step in most formal software development processes is requirements analysis, followed by testing to determine value modeling, implementation, and failure elimination (debugging). Many different approaches exist for each of those tasks. One approach popular for requirements analysis is use case analysis. Many programmers use forms of Agile software development, in which the various stages of formal software development are integrated into short cycles that take a few weeks rather than years. There are many approaches to the software development process.

Popular modeling techniques include Object-Oriented Analysis and Design (OOAD) and Model-Driven Architecture (MDA). The Unified Modeling Language (UML) is a notation used for both OOAD and MDA.

A similar technique used for database design is Entity-Relationship Modeling (ER Modeling).

Implementation techniques include imperative languages (object-oriented or procedural), functional languages, and logic programming languages.

Measuring language usage

It is very difficult to determine which modern programming languages are the most popular. Methods of measuring programming language popularity include: counting the number of job advertisements that mention the language,[23] the number of books sold and courses teaching the language (this overestimates the importance of newer languages), and estimates of the number of existing lines of code written in the language (this underestimates the number of users of business languages such as COBOL).

Some languages are very popular for particular kinds of applications, while some languages are regularly used to write many different kinds of applications. For example, COBOL is still strong in corporate data centers,[24] often on large mainframe computers, Fortran in engineering applications, scripting languages in Web development, and C in embedded software. Many applications use a mix of several languages in their construction and use. New languages are generally designed around the syntax of a prior language with new functionality added (for example, C++ adds object-orientation to C, and Java adds memory management and bytecode to C++ but, as a result, loses efficiency and the ability for low-level manipulation).

Debugging

Debugging is a very important task in the software development process since having defects in a program can have significant consequences for its users. Some languages are more prone to some kinds of faults because their specification does not require compilers to perform as much checking as other languages. Use of a static code analysis tool can help detect some possible problems. Normally the first step in debugging is to attempt to reproduce the problem. This can be a non-trivial task, for example as with parallel processes or some unusual software bugs. Also, specific user environment and usage history can make it difficult to reproduce the problem.

After the bug is reproduced, the input of the program may need to be simplified to make it easier to debug. For example, when a bug in a compiler makes it crash when parsing some large source file, a simplification of the test case that keeps only a few lines from the original source file can be sufficient to reproduce the same crash. Trial-and-error/divide-and-conquer is needed: the programmer will try to remove some parts of the original test case and check if the problem still exists. When debugging the problem in a GUI, the programmer can try to skip some user interaction from the original problem description and check if the remaining actions are sufficient for bugs to appear. Scripting and breakpointing are also part of this process.
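
The remove-and-recheck procedure described above can be automated. The following is a simplified greedy sketch in the spirit of delta debugging, not a full implementation of any published algorithm. The `crashes` predicate and the sample lines are hypothetical stand-ins for running the real program on a candidate input.

```python
def reduce_test_case(lines, still_fails):
    """Greedily drop chunks of lines for as long as still_fails(lines) stays true."""
    chunk = max(len(lines) // 2, 1)
    while chunk >= 1:
        index = 0
        while index < len(lines):
            candidate = lines[:index] + lines[index + chunk:]
            if candidate and still_fails(candidate):
                lines = candidate   # the removed chunk was irrelevant to the failure
            else:
                index += chunk      # the chunk is needed; keep it and move on
        chunk //= 2                 # retry with smaller chunks
    return lines


def crashes(lines):
    """Hypothetical failure: the crash needs both of these lines to be present."""
    return "open(" in lines and "}}" in lines


source = ["int x;", "open(", "int y;", "int z;", "}}", "return;"]
print(reduce_test_case(source, crashes))  # ['open(', '}}']
```

In practice `still_fails` would write the candidate to a file, run the failing program on it, and report whether the same crash occurred.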

Debugging is often done with IDEs. Standalone debuggers like GDB are also used, and these often provide less of a visual environment, usually using a command line. Some text editors such as Emacs allow GDB to be invoked through them, to provide a visual environment.

Programming languages

Different programming languages support different styles of programming (called programming paradigms). The choice of language used is subject to many considerations, such as company policy, suitability to task, availability of third-party packages, or individual preference. Ideally, the programming language best suited for the task at hand will be selected. Trade-offs from this ideal involve finding enough programmers who know the language to build a team, the availability of compilers for that language, and the efficiency with which programs written in a given language execute. Languages form an approximate spectrum from "low-level" to "high-level"; "low-level" languages are typically more machine-oriented and faster to execute, whereas "high-level" languages are more abstract and easier to use but execute less quickly. It is usually easier to code in "high-level" languages than in "low-level" ones. Programming languages are essential for software development. They are the building blocks for all software, from the simplest applications to the most sophisticated ones.

Allen Downey, in his book How To Think Like A Computer Scientist, writes:

- The details look different in different languages, but a few basic instructions appear in just about every language:

- Input: Gather data from the keyboard, a file, or some other device.

- Output: Display data on the screen or send data to a file or other device.

- Arithmetic: Perform basic arithmetical operations like addition and multiplication.

- Conditional Execution: Check for certain conditions and execute the appropriate sequence of statements.

- Repetition: Perform some action repeatedly, usually with some variation.
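
The five basic instructions listed above can all be seen in a short program. This is an illustrative sketch only; the function name is hypothetical, and the input is taken from a string rather than the keyboard so that the example is self-contained.

```python
def describe_numbers(text):
    """Read whitespace-separated integers from text and summarize them."""
    numbers = [int(token) for token in text.split()]  # input (from a string here)
    total = 0
    for number in numbers:                            # repetition
        total = total + number                        # arithmetic
    if total % 2 == 0:                                # conditional execution
        parity = "even"
    else:
        parity = "odd"
    return f"sum={total} ({parity})"


print(describe_numbers("3 4 5"))                      # output: sum=12 (even)
```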

Many computer languages provide a mechanism to call functions provided by shared libraries. Provided the functions in a library follow the appropriate run-time conventions (e.g., method of passing arguments), these functions may be written in any other language.
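
As one example of such a mechanism, Python's standard `ctypes` module can call a function from a shared library written in C. The sketch below assumes a Unix-like system on which the C standard library can be located and loaded in this way; it is not expected to work unchanged on other platforms.

```python
import ctypes
import ctypes.util

# Assumption: a Unix-like system. find_library may return None, in which case
# CDLL(None) exposes the symbols already loaded into the running process.
libc_path = ctypes.util.find_library("c")
libc = ctypes.CDLL(libc_path)

# State the run-time conventions explicitly: argument types and result type.
libc.abs.argtypes = [ctypes.c_int]
libc.abs.restype = ctypes.c_int

print(libc.abs(-42))  # 42, computed by the C library's abs()
```

Declaring `argtypes` and `restype` is how the caller states the conventions the library function expects; passing arguments of the wrong type would otherwise go undetected.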

Learning to Program

Learning to program has a long history related to professional standards and practices, academic initiatives and curriculum, and commercial books and materials for students, self-taught learners, hobbyists, and others who desire to create or customize software for personal use. Since the 1960s, learning to program has taken on the characteristics of a popular movement, with the rise of academic disciplines, inspirational leaders, collective identities, and strategies to grow and institutionalize change.[26] Through these social ideals and educational agendas, learning to code has become important not just for scientists and engineers, but for millions of citizens who have come to believe that creating software is beneficial to society and its members.

Context

In 1957, there were approximately 15,000 computer programmers employed in the U.S., a figure that accounts for 80% of the world’s active developers. In 2014, there were approximately 18.5 million programmers in the world, of whom 11 million can be considered professionals and 7.5 million students or hobbyists.[27] Before the rise of the commercial Internet in the mid-1990s, most programmers learned about software construction through books, magazines, user groups, and informal instruction methods, with academic coursework and corporate training playing important roles for professional workers.[28]

The first book containing specific instructions about how to program a computer may have been Maurice Wilkes, David Wheeler, and Stanley Gill's Preparation of Programs for an Electronic Digital Computer (1951). The book offered a selection of common subroutines for handling basic operations on the EDSAC, one of the world’s first stored-program computers.

When high-level languages arrived, they were introduced by numerous books and materials that explained language keywords, managing program flow, working with data, and other concepts. These languages included FLOW-MATIC, COBOL, FORTRAN, ALGOL, Pascal, BASIC, and C. An example of an early programming primer from these years is Marshal H. Wrubel's A Primer of Programming for Digital Computers (1959), which included step-by-step instructions for filling out coding sheets, creating punched cards, and using the keywords in IBM’s early FORTRAN system.[29] Daniel McCracken's A Guide to FORTRAN Programming (1961) presented FORTRAN to a larger audience, including students and office workers.

In 1961, Alan Perlis suggested that all university freshmen at the Carnegie Institute of Technology take a course in computer programming.[30] His advice was published in the popular technical journal Computers and Automation, which became a regular source of information for professional programmers.

Programmers soon had a range of learning texts at their disposal. Programmer’s references listed keywords and functions related to a language, often in alphabetical order, as well as technical information about compilers and related systems. An early example was IBM’s Programmers’ Reference Manual: the FORTRAN Automatic Coding System for the IBM 704 EDPM (1956).

Over time, the genre of programmer’s guides emerged, which presented the features of a language in tutorial or step-by-step format. Many early primers started with a program known as “Hello, World”, which presented the shortest program a developer could create in a given system. Programmer’s guides then went on to discuss core topics such as declaring variables, data types, formulas, flow control, user-defined functions, and manipulating data.

Early and influential programmer’s guides included John G. Kemeny and Thomas E. Kurtz’s BASIC Programming (1967), Kathleen Jensen and Niklaus Wirth’s The Pascal User Manual and Report (1971), and Brian Kernighan and Dennis Ritchie’s The C Programming Language (1978). Similar books for popular audiences (but with a much lighter tone) included Bob Albrecht’s My Computer Loves Me When I Speak BASIC (1972), Al Kelley and Ira Pohl’s A Book on C (1984), and Dan Gookin's C for Dummies (1994).

Beyond language-specific primers, there were numerous books and academic journals that introduced professional programming practices. Many were designed for university courses in computer science, software engineering, or related disciplines. Donald Knuth’s The Art of Computer Programming (1968 and later) presented hundreds of computational algorithms and their analysis. The Elements of Programming Style (1974), by Brian W. Kernighan and P. J. Plauger, concerned itself with programming style, the idea that programs should be written to satisfy not only the compiler but also human readers. Jon Bentley’s Programming Pearls (1986) offered practical advice about the art and craft of programming in professional and academic contexts. Texts specifically designed for students included Doug Cooper and Michael Clancy's Oh Pascal! (1982), Alfred Aho’s Data Structures and Algorithms (1983), and Daniel Watt's Learning with Logo (1983).

Technical Publishers

As personal computers became mass-market products, thousands of trade books and magazines sought to teach professional, hobbyist, and casual users to write computer programs. A sample of these learning resources includes BASIC Computer Games, Microcomputer Edition (1978), by David Ahl; Programming the Z80 (1979), by Rodnay Zaks; Programmer’s CP/M Handbook (1983), by Andy Johnson-Laird; C Primer Plus (1984), by Mitchell Waite and The Waite Group; The Peter Norton Programmer’s Guide to the IBM PC (1985), by Peter Norton; Advanced MS-DOS (1986), by Ray Duncan; Learn BASIC Now (1989), by Michael Halvorson and David Rygymr; Programming Windows 3.1 (1992), by Charles Petzold; Code Complete: A Practical Handbook for Software Construction (1993), by Steve McConnell; and Tricks of the Game-Programming Gurus (1994), by André LaMothe.

The PC software industry spurred the creation of numerous book publishers that offered programming primers and tutorials, as well as books for advanced software developers.[31] These publishers included Addison-Wesley, IDG, Macmillan Inc., McGraw-Hill, Microsoft Press, O’Reilly Media, Prentice Hall, Sybex, Ventana Press, Waite Group Press, Wiley, Wrox Press, and Ziff-Davis.

Computer magazines and journals also provided learning content for professional and hobbyist programmers. A partial list of these resources includes Amiga World, Byte, Communications of the ACM, Computer, Compute!, Computer Language, Computers and Electronics, Dr. Dobb’s Journal, IEEE Software, Macworld, PC Magazine, PC/Computing, and UnixWorld.

Digital Learning / Online Resources

Between 2000 and 2010, computer book and magazine publishers declined significantly as providers of programming instruction, as programmers moved to Internet resources to expand their access to information. This shift brought forward new digital products and mechanisms to learn programming skills. During the transition, digital books from publishers transferred information that had traditionally been delivered in print to new and expanding audiences.[32]

Important Internet resources for learning to code included blogs, wikis, videos, online databases, subscription sites, and custom websites focused on coding skills. New commercial resources included YouTube videos, Lynda.com tutorials (later LinkedIn Learning), Khan Academy, Codecademy, GitHub, and numerous coding bootcamps.

Most software development systems and game engines included rich online help resources, including integrated development environments (IDEs), context-sensitive help, APIs, and other digital resources. Commercial software development kits (SDKs) also provided a collection of software development tools and documentation in one installable package.

Commercial and non-profit organizations published learning websites for developers, created blogs, and established newsfeeds and social media resources about programming. Corporations like Apple, Microsoft, Oracle, Google, and Amazon built corporate websites providing support for programmers, including resources like the Microsoft Developer Network (MSDN). Contemporary movements like Hour of Code (Code.org) show how learning to program has become associated with digital learning strategies, education agendas, and corporate philanthropy.

Programmers

Computer programmers are those who write computer software. Their jobs usually involve:

- Prototyping

- Coding

- Debugging

- Documentation

- Integration

- Maintenance

- Requirements analysis

- Software architecture

- Software testing

- Specification

Although programming has been presented in the media as a somewhat mathematical subject, some research shows that good programmers have strong skills in natural human languages, and that learning to code is similar to learning a foreign language.[33][34]

See also

- Code smell

- Computer networking

- Competitive programming

- Programming best practices

- Systems programming

References

1. Bebbington, Shaun (2014). "What is coding". Tumblr. Archived from the original on April 29, 2020. Retrieved March 3, 2014.

2. Bebbington, Shaun (2014). "What is programming". Tumblr. Archived from the original on April 29, 2020. Retrieved March 3, 2014.

3. Koetsier, Teun (2001). "On the prehistory of programmable machines: musical automata, looms, calculators". Mechanism and Machine Theory. 36 (5). Elsevier: 589–603. doi:10.1016/S0094-114X(01)00005-2.

4. Kapur, Ajay; Carnegie, Dale; Murphy, Jim; Long, Jason (2017). "Loudspeakers Optional: A history of non-loudspeaker-based electroacoustic music". Organised Sound. 22 (2). Cambridge University Press: 195–205. doi:10.1017/S1355771817000103. ISSN 1355-7718.

5. Fowler, Charles B. (October 1967). "The Museum of Music: A History of Mechanical Instruments". Music Educators Journal. 54 (2): 45–49. doi:10.2307/3391092. JSTOR 3391092. S2CID 190524140.

6. Noel Sharkey (2007), A 13th Century Programmable Robot, University of Sheffield

7. Dooley, John F. (2013). A Brief History of Cryptology and Cryptographic Algorithms. Springer Science & Business Media. pp. 12–3. ISBN 9783319016283.

8. Fuegi, J.; Francis, J. (2003). "Lovelace & Babbage and the Creation of the 1843 'notes'". IEEE Annals of the History of Computing. 25 (4): 16. doi:10.1109/MAHC.2003.1253887.

9. Rojas, R. (2021). "The Computer Programs of Charles Babbage". IEEE Annals of the History of Computing. 43 (1): 6–18. doi:10.1109/MAHC.2020.3045717.

10. Rojas, R. (2024). "The First Computer Program" (PDF). Communications of the ACM. 67 (6): 78–81. doi:10.1145/3624731.

11. da Cruz, Frank (March 10, 2020). "Columbia University Computing History – Herman Hollerith". Columbia University. Columbia.edu. Archived from the original on April 29, 2020. Retrieved April 25, 2010.

12. "Memory & Storage | Timeline of Computer History | Computer History Museum". www.computerhistory.org. Archived from the original on May 27, 2021. Retrieved June 3, 2021.

13. Ridgway, Richard (1952). "Compiling routines". Proceedings of the 1952 ACM national meeting (Toronto) on - ACM '52. pp. 1–5. doi:10.1145/800259.808980. ISBN 9781450379250. S2CID 14878552.

14. Maurice V. Wilkes. 1968. Computers Then and Now. Journal of the Association for Computing Machinery, 15(1):1–7, January. p. 3 (a comment in brackets added by editor), "(I do not think that the term compiler was then [1953] in general use, although it had in fact been introduced by Grace Hopper.)"

15. The World's First COBOL Compilers. Archived 13 October 2011 at the Wayback Machine.

16. Bergstein, Brian (March 20, 2007). "Fortran creator John Backus dies". NBC News. Archived from the original on April 29, 2020. Retrieved April 25, 2010.

17. "NIST To Develop Cloud Roadmap". InformationWeek. November 5, 2010. "Computing initiative seeks to remove barriers to cloud adoption in security, interoperability, portability and reliability."

18. "What is it based on". Computerworld. April 9, 1984. p. 13. "Is it based on ... Reliability Portability. Compatibility"

19. "Programming 101: Tips to become a good programmer - Wisdom Geek". Wisdom Geek. May 19, 2016. Archived from the original on May 23, 2016. Retrieved May 23, 2016.

20. Fundamentals of Software Architecture: An Engineering Approach. O'Reilly Media. 2020. ISBN 978-1492043454.

21. Elshoff, James L.; Marcotty, Michael (1982). "Improving computer program readability to aid modification". Communications of the ACM. 25 (8): 512–521. doi:10.1145/358589.358596. S2CID 30026641.

22. Multiple (wiki). "Readability". Docforge. Archived from the original on April 29, 2020. Retrieved January 30, 2010.

23. Enticknap, Nicholas (September 11, 2007). "SSL/Computer Weekly IT salary survey: finance boom drives IT job growth". Archived from the original on October 26, 2011. Retrieved June 24, 2009.

24. Mitchell, Robert (May 21, 2012). "The Cobol Brain Drain". Computer World. Archived from the original on February 12, 2019. Retrieved May 9, 2015.

25. "Photograph courtesy Naval Surface Warfare Center, Dahlgren, Virginia, from National Geographic Sept. 1947". July 15, 2020. Archived from the original on November 13, 2020. Retrieved November 10, 2020.

26. Halvorson, Michael J. (2020). Code Nation: Personal Computing and the Learn to Program Movement in America. New York, NY: ACM Books. pp. 3–6.

27. 2014 Worldwide Software Developer and ICT-Skilled Worker Estimates. Framingham, MA: International Data Corporation. 2014.

28. Ensmenger, Nathan (2010). The Computer Boys Take Over: Computers, Programmers, and the Politics and Technical Expertise. Cambridge, MA: The MIT Press.

29. Halvorson, Michael J. (2020). Code Nation: Personal Computing and the Learn to Program Movement in America. New York, NY: ACM Books. p. 80.

30. Perlis, Alan (1961). "The role of the digital computer in the university". Computers and Automation 10, 4 and 4B. pp. 10–15.

31. Halvorson, Michael J. (2020). Code Nation: Personal Computing and the Learn to Program Movement in America. New York, NY: ACM Books. p. 352.

32. Halvorson, Michael J. (2020). Code Nation: Personal Computing and the Learn to Program Movement in America. New York, NY: ACM Books. pp. 365–368.

33. Prat, Chantel S.; Madhyastha, Tara M.; Mottarella, Malayka J.; Kuo, Chu-Hsuan (March 2, 2020). "Relating Natural Language Aptitude to Individual Differences in Learning Programming Languages". Scientific Reports. 10 (1): 3817. Bibcode:2020NatSR..10.3817P. doi:10.1038/s41598-020-60661-8. ISSN 2045-2322. PMC 7051953. PMID 32123206.

34. "To the brain, reading computer code is not the same as reading language". MIT News | Massachusetts Institute of Technology. December 15, 2020. Retrieved July 29, 2023.

Sources

- Ceruzzi, Paul E. (1998). History of Computing. Cambridge, Massachusetts: MIT Press. ISBN 9780262032551 – via EBSCOhost.

- Evans, Claire L. (2018). Broad Band: The Untold Story of the Women Who Made the Internet. New York: Portfolio/Penguin. ISBN 9780735211759.

- Gürer, Denise (1995). "Pioneering Women in Computer Science" (PDF). Communications of the ACM. 38 (1): 45–54. doi:10.1145/204865.204875. S2CID 6626310. Archived (PDF) from the original on October 9, 2022.

- Smith, Erika E. (2013). "Recognizing a Collective Inheritance through the History of Women in Computing". CLCWeb: Comparative Literature & Culture: A WWWeb Journal. 15 (1): 1–9 – via EBSCOhost.

Further reading

- A.K. Hartmann, Practical Guide to Computer Simulations, Singapore: World Scientific (2009)

- A. Hunt, D. Thomas, and W. Cunningham, The Pragmatic Programmer. From Journeyman to Master, Amsterdam: Addison-Wesley Longman (1999)

- Brian W. Kernighan, The Practice of Programming, Pearson (1999)

- Weinberg, Gerald M., The Psychology of Computer Programming, New York: Van Nostrand Reinhold (1971)

- Edsger W. Dijkstra, A Discipline of Programming, Prentice-Hall (1976)

- O.-J. Dahl, E.W.Dijkstra, C.A.R. Hoare, Structured Programming, Academic Press (1972)

- David Gries, The Science of Programming, Springer-Verlag (1981)

External links

- Media related to Computer programming at Wikimedia Commons

- Quotations related to Programming at Wikiquote